Introduction

Overview

Teaching: 5 min

Exercises: 10 min

Compatibility:Questions

What is ESMValTool?

Who are the people behind ESMValTool?

Objectives

Familiarize with ESMValTool

Synchronize expectations

What is ESMValTool?

This tutorial is a first introduction to ESMValTool. Before diving into the technical steps, let’s talk about what ESMValTool is all about.

What is ESMValTool?

What do you already know about or expect from ESMValTool?

ESMValTool is…

EMSValTool is many things, but in this tutorial we will focus on the following traits:

✓ A tool to analyse climate data

✓ A collection of diagnostics for reproducible climate science

✓ A community effort

A tool to analyse climate data

ESMValTool takes care of finding, opening, checking, fixing, concatenating, and preprocessing CMIP data and several other supported datasets.

The central component of ESMValTool that we will see in this tutorial is the recipe. Any ESMValTool recipe is basically a set of instructions to reproduce a certain result. The basic structure of a recipe is as follows:

- Documentation with relevant (citation) information

- Datasets that should be analysed

- Preprocessor steps that must be applied

- Diagnostic scripts performing more specific evaluation steps

An example recipe could look like this:

documentation:

title: This is an example recipe.

description: Example recipe

authors:

- lastname_firstname

datasets:

- {dataset: HadGEM2-ES, project: CMIP5, exp: historical, mip: Amon,

ensemble: r1i1p1, start_year: 1960, end_year: 2005}

preprocessors:

global_mean:

area_statistics:

operator: mean

diagnostics:

hockeystick_plot:

description: plot of global mean temperature change

variables:

temperature:

short_name: tas

preprocessor: global_mean

scripts: hockeystick.py

Understanding the different section of the recipe

Try to figure out the meaning of the different dataset keys. Hint: they can be found in the documentation of ESMValTool.

Solution

The keys are explained in the ESMValTool documentation, in the

Recipe section, under datasets

A collection of diagnostics for reproducible climate science

More than a tool, ESMValTool is a collection of publicly available recipes and diagnostic scripts. This makes it possible to easily reproduce important results.

Explore the available recipes

Go to the ESMValTool Documentation webpage and explore the

Available recipessection. Which recipe(s) would you like to try?

A community effort

ESMValTool is built and maintained by an active community of scientists and software engineers. It is an open source project to which anyone can contribute. Many of the interactions take place on GitHub. Here, we briefly introduce you to some of the most important pages.

Meet the ESMValGroup

Go to github.com/ESMValGroup. This is the GitHub page of our ‘organization’. Have a look around. How many collaborators are there? Do you know any of them?

Near the top of the page there are 2 pinned repositories: ESMValTool and ESMValCore. Visit each of the repositories. How many people have contributed to each of them? Can you also find out how many people have contributed to this tutorial?

Issues and pull requests

Go back to the repository pages of ESMValTool or ESMValCore. There are tabs for ‘issues’ and ‘pull requests’. You can use the labels to navigate them a bit more. How many open issues are about enhancements of ESMValTool? And how many bugs have been fixed in ESMValCore? There is also an ‘insights’ tab, where you can see a summary of recent activity. How many issues have been opened and closed in the past month?

Conclusion

This concludes the introduction of the tutorial. You now have a basic knowledge of ESMValTool and its community. The following episodes will walk you through the installation, configuration and running your first recipes.

Key Points

ESMValTool provides a reliable interface to analyse and evaluate climate data

A large collection of recipes and diagnostic scripts is already available

ESMValTool is built and maintained by an active community of scientists and developers

Quickstart guide

Overview

Teaching: 2 min

Exercises: 8 min

Compatibility:Questions

What is the purpose of the quickstart guide?

How do I load and check the ESMValTool environment?

How do I configure ESMValTool?

How do I run a recipe?

Objectives

Understand the purpose of the quickstart guide

Load and check the ESMValTool environment

Configure ESMValTool

Run a recipe

What is the purpose of the quickstart guide?

- The purpose of the quickstart guide is to enable a user of ESMValTool to run ESMValTool as quickly as possible by making the bare minimum number of changes.

How do I load and check the ESMValTool environment?

For this quickstart guide, an assumption is made that ESMValTool has already been installed at the site where ESMValTool will be run. If this is not the case, see the Installation episode in this tutorial.

Load the ESMValTool environment by following the instructions at ESMValTool: Pre-installed versions on HPC clusters / other servers.

Check the ESMValTool environment by accessing the help for ESMValTool:

esmvaltool --help

How do I configure ESMValTool?

Create the ESMValTool user configuration file (the file is written by default to

~/.esmvaltool/config-user.yml):esmvaltool config get_config_userEdit the ESMValTool user configuration file using your favourite text editor to uncomment the lines relating to the site where ESMValTool will be run.

For more details about the ESMValTool user configuration file see the Configuration episode in this tutorial.

How do I run a recipe?

Run the example Python recipe:

esmvaltool run examples/recipe_python.ymlWait for the recipe to complete. If the recipe completes successfully, the last line printed to screen at the end of the log will look something like:

YYYY-MM-DD HH:mm:SS, NNN UTC [NNNNN] INFO Run was successfulView the output of the recipe by opening the HTML file produced by ESMValTool (the location of this file is printed to screen near the end of the log):

YYYY-MM-DD HH:mm:SS, NNN UTC [NNNNN] INFO Wrote recipe output to: file:///$HOME/esmvaltool_output/recipe_python_<date>_<time>/index.htmlFor more details about running recipes see the Running your first recipe episode in this tutorial.

Key Points

The purpose of the quickstart guide is to enable a user of ESMValTool to run ESMValTool as quickly as possible without having to go through the whole tutorial

Use the

module loadcommand to load the ESMValTool environment, see the [Installation][lesson-installation] episode for more details and useesmvaltool --helpto check the ESMValTool environmentUse

esmvaltool config get_config_userto create the ESMValTool user configuration fileUse

esmvaltool run <recipe>.ymlto run a recipe

Installation

Overview

Teaching: 10 min

Exercises: 10 min

Compatibility:Questions

What are the prerequisites for installing ESMValTool?

How do I confirm that the installation was successful?

Objectives

Install ESMValTool

Demonstrate that the installation was successful

Overview

The instructions help with the installation of ESMValTool on operating systems like Linux/MacOSX/Windows. We use the Mamba package manager to install the ESMValTool. Other installation methods are also available; they can be found in the documentation. We will first install Mamba, and then ESMValTool. We end this chapter by testing that the installation was successful.

Before we begin, here are all the possible ways in which you can use ESMValTool depending on your level of expertise or involvement with ESMValTool and associated software such as GitHub and Mamba.

- If you have access to a server where ESMValTool is already installed

as a module, for e.g., the CEDA JASMIN

server, you can simply load the module with the following command:

module load esmvaltoolAfter loading

esmvaltool, we can start using ESMValTool right away. Please see the next lesson. - If you would like to install ESMValTool as a mamba package, then this lesson will tell you how!

- If you would like to start experimenting with existing diagnostics or contributing to ESMvalTool, please see the instructions for source installation in the lesson Development and contribution and in the documentation.

Install ESMValTool on Windows

ESMValTool does not directly support Windows, but successful usage has been reported through the Windows Subsystem for Linux(WSL), available in Windows 10. To install the WSL please follow the instructions on the Windows Documentation page. After installing the WSL, installation can be done using the same instructions for Linux/MacOSX.

Install ESMValTool on Linux/MacOSX

Install Mamba

ESMValTool is distributed using Mamba.

To install mamba on Linux or MacOSX, follow the instructions below:

-

Please download the installation file for the latest Mamba version here.

-

Next, run the installer from the place where you downloaded it:

On

Linux:bash Mambaforge-Linux-x86_64.shOn

MacOSX:bash Mambaforge-MacOSX-x86_64.sh -

Follow the instructions in the installer. The defaults should normally suffice.

-

You will need to restart your terminal for the changes to have effect.

-

We recommend updating mamba before the esmvaltool installation. To do so, run:

mamba update --name base mamba -

Verify you have a working mamba installation by:

which mambaThis should show the path to your mamba executable, e.g.

~/mambaforge/bin/mamba.

For more information about installing mamba, see the mamba installation documentation.

Install the ESMValTool package

The ESMValTool package contains diagnostics scripts in four languages: R, Python, Julia and NCL. This introduces a lot of dependencies, and therefore the installation can take quite long. It is, however, possible to install ‘subpackages’ for each of the languages. The following (sub)packages are available:

esmvaltool-pythonesmvaltool-nclesmvaltool-resmvaltool–> the complete package, i.e. the combination of the above.

For the tutorial, we will install the complete package. Thus, to install the ESMValTool package, run

mamba create --name esmvaltool esmvaltool

On MacOSX ESMValTool functionalities in Julia, NCL, and R are not supported. To install a Mamba environment on MacOSX, please refer to specific information.

This will create a new Mamba

environment

called esmvaltool, with the ESMValTool package and all of its dependencies

installed in it.

Common issues

You find a list of common installation problems and their solutions in the documentation.

Install Julia

Some ESMValTool diagnostics are written in the Julia programming language. If you want a full installation of ESMValTool including Julia diagnostics, you need to make sure Julia is installed before installing ESMValTool.

In this tutorial, we will not use Julia, but for reference, we have listed the steps to install Julia below. Complete instructions for installing Julia can be found on the Julia installation page.

Julia installation instructions

First, open a bash terminal and activate the newly created

esmvaltoolenvironment.conda activate esmvaltoolNext, to install Julia via

mamba, you can use the following command:mamba install juliaTo check that the Julia executable can be found, run

which juliato display the path to the Julia executable, it should be

~/mambaforge/envs/esmvaltool/bin/juliaTo test that Julia is installed correctly, run

juliato start the interactive Julia interpreter. Press

Ctrl+Dto exit.

Test that the installation was successful

To test that the installation was successful, run

conda activate esmvaltool

to activate the conda environment called esmvaltool. In the shell prompt the

active conda environment should have been changed from (base) to

(esmvaltool).

Next, run

esmvaltool --help

to display the command line help.

Version of ESMValTool

Can you figure out which version of ESMValTool has been installed?

Solution

The

esmvaltool --helpcommand listsversionas a command to get the versionWhen you run

esmvaltool versionThe version of ESMValTool installed should be displayed on the screen as:

ESMValCore: 2.8.0 ESMValTool: 2.8.0Note that on HPC servers such as JASMIN, sometimes a more recent development version may be displayed for ESMValTool, for e.g.

ESMValTool: 2.9.0.dev4+g6948d5512

Key Points

All the required packages can be installed using mamba.

You can find more information about installation in the documentation.

Configuration

Overview

Teaching: 10 min

Exercises: 10 min

Compatibility:Questions

What is the user configuration file and how should I use it?

Objectives

Understand the contents of the user-config.yml file

Prepare a personalized user-config.yml file

Configure ESMValTool to use some settings

The configuration file

For the purposes of this tutorial, we will create a directory in our home directory

called esmvaltool_tutorial and use that as our working directory. The following steps

should do that:

mkdir esmvaltool_tutorial

cd esmvaltool_tutorial

The config-user.yml configuration file contains all the global level

information needed by ESMValTool to run.

This is a YAML file.

You can get the default configuration file by running:

esmvaltool config get_config_user --path=<target_dir>

The default configuration file will be downloaded to the directory specified with

the --path variable. For instance, you can provide the path to your working directory

as the target_dir. If this option is not used, the file will be saved to the default

location: ~/.esmvaltool/config-user.yml, where ~ is the

path to your home directory. Note that files and directories starting with a

period are “hidden”, to see the .esmvaltool directory in the terminal use

ls -la ~. Note that if a configuration file by that name already exists in the default

location, the get_config_user command will not update the file as ESMValTool will not

overwrite the file. You will have to move the file first if you want an updated copy of the

user configuration file.

We run a text editor called nano to have a look inside the configuration file

and then modify it if needed:

nano ~/.esmvaltool/config-user.yml

Any other editor can be used, e.g.vim.

This file contains the information for:

- Output settings

- Destination directory

- Auxiliary data directory

- Number of tasks that can be run in parallel

- Rootpath to input data

- Directory structure for the data from different projects

Text editor side note

No matter what editor you use, you will need to know where it searches for and saves files. If you start it from the shell, it will (probably) use your current working directory as its default location. We use

nanoin examples here because it is one of the least complex text editors. Press ctrl + O to save the file, and then ctrl + X to exitnano.

Output settings

The configuration file starts with output settings that

inform ESMValTool about your preference for output.

You can turn on or off the setting by true or false

values. Most of these settings are fairly self-explanatory.

Saving preprocessed data

Later in this tutorial, we will want to look at the contents of the

preprocfolder. This folder contains preprocessed data and is removed by default when ESMValTool is run. In the configuration file, which settings can be modified to prevent this from happening?Solution

If the option

remove_preproc_diris set tofalse, then thepreproc/directory contains all the pre-processed data and the metadata interface files. If the optionsave_intermediary_cubesis set totruethen data will also be saved after each preprocessor step in the folderpreproc. Note that saving all intermediate results to file will result in a considerable slowdown, and can quickly fill your disk.

Destination directory

The destination directory is the rootpath where ESMValTool will store its output folders containing e.g. figures, data, logs, etc. With every run, ESMValTool automatically generates a new output folder determined by recipe name, and date and time using the format: YYYYMMDD_HHMMSS.

Set the destination directory

Let’s name our destination directory

esmvaltool_outputin the working directory. ESMValTool should write the output to this path, so make sure you have the disk space to write output to this directory. How do we set this in theconfig-user.yml?Solution

We use

output_direntry in theconfig-user.ymlfile as:output_dir: ./esmvaltool_outputIf the

esmvaltool_outputdoes not exist, ESMValTool will generate it for you.

Rootpath to input data

ESMValTool uses several categories (in ESMValTool, this is referred to as projects) for input data based on their source. The current categories in the configuration file are mentioned below. For example, CMIP is used for a dataset from the Climate Model Intercomparison Project whereas OBS may be used for an observational dataset. More information about the projects used in ESMValTool is available in the documentation. When using ESMValTool on your own machine, you can create a directory to download climate model data or observation data sets and let the tool use data from there. It is also possible to ask ESMValTool to download climate model data as needed. This can be done by specifying a download directory and by setting the option to download data as shown below.

# Directory for storing downloaded climate data

download_dir: ~/climate_data

search_esgf: always

If you are working offline or do not want to download the data then set the

option above to never. If you want to download data only when the necessary files

are missing at the usual location, you can set the option to when_missing.

The rootpath specifies the directories where ESMValTool will look for input data.

For each category, you can define either one path or several paths as a list. For example:

rootpath:

CMIP5: [~/cmip5_inputpath1, ~/cmip5_inputpath2]

OBS: ~/obs_inputpath

RAWOBS: ~/rawobs_inputpath

default: ~/climate_data

These are typically available in the default configuration file you downloaded, so simply removing the machine specific lines should be sufficient to access input data.

Set the correct rootpath

In this tutorial, we will work with data from CMIP5 and CMIP6. How can we modify the

rootpathto make sure the data path is set correctly for both CMIP5 and CMIP6? Note: to get the data, check the instructions in Setup.Solution

- Are you working on your own local machine? You need to add the root path of the folder where the data is available to the

config-user.ymlfile as:rootpath: ... CMIP5: ~/esmvaltool_tutorial/data CMIP6: ~/esmvaltool_tutorial/data

- Are you working on your local machine and have downloaded data using ESMValTool? You need to add the root path of the folder where the data has been downloaded to as specified in the

download_dir.rootpath: ... CMIP5: ~/climate_data CMIP6: ~/climate_data

- Are you working on a computer cluster like Jasmin or DKRZ? Site-specific path to the data for JASMIN/DKRZ/ETH/IPSL are already listed at the end of the

config-user.ymlfile. You need to uncomment the related lines. For example, on JASMIN:auxiliary_data_dir: /gws/nopw/j04/esmeval/aux_data/AUX rootpath: CMIP6: /badc/cmip6/data/CMIP6 CMIP5: /badc/cmip5/data/cmip5/output1 OBS: /gws/nopw/j04/esmeval/obsdata-v2 OBS6: /gws/nopw/j04/esmeval/obsdata-v2 obs4MIPs: /gws/nopw/j04/esmeval/obsdata-v2 ana4mips: /gws/nopw/j04/esmeval/obsdata-v2 default: /gws/nopw/j04/esmeval/obsdata-v2

- For more information about setting the rootpath, see also the ESMValTool documentation.

Directory structure for the data from different projects

Input data can be from various models, observations and reanalysis data that

adhere to the CF/CMOR standard. The drs setting

describes the file structure.

The drs setting describes the file structure for several projects (e.g.

CMIP6, CMIP5, obs4mips, OBS6, OBS) on several key machines

(e.g. BADC, CP4CDS, DKRZ, ETHZ, SMHI, BSC). For more

information about drs, you can visit the ESMValTool documentation on

Data Reference Syntax (DRS).

Set the correct drs

In this lesson, we will work with data from CMIP5 and CMIP6. How can we set the correct

drs?Solution

- Are you working on your own local machine? You need to set the

drsof the data in theconfig-user.ymlfile as:drs: CMIP5: default CMIP6: default- Are you asking ESMValTool to download the data for use with your diagnostics? You need to set the

drsof the data in theconfig-user.ymlfile as:drs: CMIP5: ESGF CMIP6: ESGF CORDEX: ESGF obs4MIPs: ESGF- Are you working on a computer cluster like Jasmin or DKRZ? Site-specific

drsof the data are already listed at the end of theconfig-user.ymlfile. You need to uncomment the related lines. For example, on Jasmin:# Site-specific entries: Jasmin # Uncomment the lines below to locate data on JASMIN drs: CMIP6: BADC CMIP5: BADC OBS: default OBS6: default obs4mips: default ana4mips: default

Explain the default drs (if working on local machine)

- In the previous exercise, we set the

drsof CMIP5 data todefault. Can you explain why?- Have a look at the directory structure of the

OBSdata. There is a folder calledTier1. What does it mean?Solution

drs: defaultis one way to retrieve data from a ROOT directory that has no DRS-like structure.defaultindicates that all the files are in a folder without any structure.Observational data are organized in Tiers depending on their level of public availability. Therefore the default directory must be structured accordingly with sub-directories

TierXe.g. Tier1, Tier2 or Tier3, even whendrs: default. More details can be found in the documentation.

Other settings

Auxiliary data directory

The

auxiliary_data_dirsetting is the path where any required additional auxiliary data files are stored. This location allows us to tell the diagnostic script where to find the files if they can not be downloaded at runtime. This option should not be used for model or observational datasets, but for data files (e.g. shape files) used in plotting such as coastline descriptions and if you want to feed some additional data (e.g. shape files) to your recipe.auxiliary_data_dir: ~/auxiliary_dataSee more information in ESMValTool document.

Number of parallel tasks

This option enables you to perform parallel processing. You can choose the number of tasks in parallel as 1/2/3/4/… or you can set it to

null. That tells ESMValTool to use the maximum number of available CPUs. For the purpose of the tutorial, please set ESMValTool use only 1 cpu:max_parallel_tasks: 1In general, if you run out of memory, try setting

max_parallel_tasksto 1. Then, check the amount of memory you need for that by inspecting the filerun/resource_usage.txtin the output directory. Using the number there you can increase the number of parallel tasks again to a reasonable number for the amount of memory available in your system.

Make your own configuration file

It is possible to have several configuration files with different purposes, for example: config-user_formalised_runs.yml, config-user_debugging.yml. In this case, you have to pass the path of your own configuration file as a command-line option when running the ESMValTool. We will learn how to do this in the next lesson.

Key Points

The

config-user.ymltells ESMValTool where to find input data.

output_dirdefines the destination directory.

rootpathdefines the root path of the data.

drsdefines the directory structure of the data.

Running your first recipe

Overview

Teaching: 15 min

Exercises: 15 min

Compatibility:Questions

How to run a recipe?

What happens when I run a recipe?

Objectives

Run an existing ESMValTool recipe

Examine the log information

Navigate the output created by ESMValTool

Make small adjustments to an existing recipe

This episode describes how ESMValTool recipes work, how to run a recipe and how to explore the recipe output. By the end of this episode, you should be able to run your first recipe, look at the recipe output, and make small modifications.

Running an existing recipe

The recipe format has briefly been introduced in the Introduction episode. To see all the recipes that are shipped with ESMValTool, type

esmvaltool recipes list

We will start by running examples/recipe_python.yml

esmvaltool run examples/recipe_python.yml

or if you have the user configuration file in your current directory then

esmvaltool run --config_file ./config-user.yml examples/recipe_python.yml

If everything is okay, you should see that ESMValTool is printing a lot of output to the command line. The final message should be “Run was successful”. The exact output varies depending on your machine, but it should look something like the example log output on terminal below.

Example output

2023-05-01 20:59:33,345 UTC [18776] INFO ______________________________________________________________________ _____ ____ __ ____ __ _ _____ _ | ____/ ___|| \/ \ \ / /_ _| |_ _|__ ___ | | | _| \___ \| |\/| |\ \ / / _` | | | |/ _ \ / _ \| | | |___ ___) | | | | \ V / (_| | | | | (_) | (_) | | |_____|____/|_| |_| \_/ \__,_|_| |_|\___/ \___/|_| ______________________________________________________________________ ESMValTool - Earth System Model Evaluation Tool. http://www.esmvaltool.org CORE DEVELOPMENT TEAM AND CONTACTS: Birgit Hassler (Co-PI; DLR, Germany - birgit.hassler@dlr.de) Alistair Sellar (Co-PI; Met Office, UK - alistair.sellar@metoffice.gov.uk) Bouwe Andela (Netherlands eScience Center, The Netherlands - b.andela@esciencecenter.nl) Lee de Mora (PML, UK - ledm@pml.ac.uk) Niels Drost (Netherlands eScience Center, The Netherlands - n.drost@esciencecenter.nl) Veronika Eyring (DLR, Germany - veronika.eyring@dlr.de) Bettina Gier (UBremen, Germany - gier@uni-bremen.de) Remi Kazeroni (DLR, Germany - remi.kazeroni@dlr.de) Nikolay Koldunov (AWI, Germany - nikolay.koldunov@awi.de) Axel Lauer (DLR, Germany - axel.lauer@dlr.de) Saskia Loosveldt-Tomas (BSC, Spain - saskia.loosveldt@bsc.es) Ruth Lorenz (ETH Zurich, Switzerland - ruth.lorenz@env.ethz.ch) Benjamin Mueller (LMU, Germany - b.mueller@iggf.geo.uni-muenchen.de) Valeriu Predoi (URead, UK - valeriu.predoi@ncas.ac.uk) Mattia Righi (DLR, Germany - mattia.righi@dlr.de) Manuel Schlund (DLR, Germany - manuel.schlund@dlr.de) Breixo Solino Fernandez (DLR, Germany - breixo.solinofernandez@dlr.de) Javier Vegas-Regidor (BSC, Spain - javier.vegas@bsc.es) Klaus Zimmermann (SMHI, Sweden - klaus.zimmermann@smhi.se) For further help, please read the documentation at http://docs.esmvaltool.org. Have fun! 2023-05-01 20:59:33,345 UTC [18776] INFO Package versions 2023-05-01 20:59:33,345 UTC [18776] INFO ---------------- 2023-05-01 20:59:33,345 UTC [18776] INFO ESMValCore: 2.8.0 2023-05-01 20:59:33,346 UTC [18776] INFO ESMValTool: 2.9.0.dev4+g6948d5512 2023-05-01 20:59:33,346 UTC [18776] INFO ---------------- 2023-05-01 20:59:33,346 UTC [18776] INFO Using config file /home/users/username/esmvaltool_tutorial/config-user.yml 2023-05-01 20:59:33,346 UTC [18776] INFO Writing program log files to: /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/main_log.txt /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/main_log_debug.txt 2023-05-01 20:59:35,209 UTC [18776] INFO Starting the Earth System Model Evaluation Tool at time: 2023-05-01 20:59:35 UTC 2023-05-01 20:59:35,210 UTC [18776] INFO ---------------------------------------------------------------------- 2023-05-01 20:59:35,210 UTC [18776] INFO RECIPE = /apps/jasmin/community/esmvaltool/ESMValTool_2.8.0/esmvaltool/recipes/examples/recipe_python.yml 2023-05-01 20:59:35,210 UTC [18776] INFO RUNDIR = /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run 2023-05-01 20:59:35,211 UTC [18776] INFO WORKDIR = /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/work 2023-05-01 20:59:35,211 UTC [18776] INFO PREPROCDIR = /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/preproc 2023-05-01 20:59:35,211 UTC [18776] INFO PLOTDIR = /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/plots 2023-05-01 20:59:35,211 UTC [18776] INFO ---------------------------------------------------------------------- 2023-05-01 20:59:35,211 UTC [18776] INFO Running tasks using at most 8 processes 2023-05-01 20:59:35,211 UTC [18776] INFO If your system hangs during execution, it may not have enough memory for keeping this number of tasks in memory. 2023-05-01 20:59:35,211 UTC [18776] INFO If you experience memory problems, try reducing 'max_parallel_tasks' in your user configuration file. 2023-05-01 20:59:35,485 UTC [18776] INFO For Dataset: tas, Amon, CMIP6, BCC-ESM1, historical, r1i1p1f1, gn, supplementaries: areacella, *, *, *, *: ignoring supplementary variable 'areacella', unable to expand wildcards 'mip', 'exp', 'ensemble', 'activity', 'institute'. 2023-05-01 20:59:35,542 UTC [18776] INFO Creating tasks from recipe 2023-05-01 20:59:35,542 UTC [18776] INFO Creating tasks for diagnostic map 2023-05-01 20:59:35,542 UTC [18776] INFO Creating diagnostic task map/script1 2023-05-01 20:59:35,544 UTC [18776] INFO Creating preprocessor task map/tas 2023-05-01 20:59:35,544 UTC [18776] INFO Creating preprocessor 'to_degrees_c' task for variable 'tas' 2023-05-01 20:59:35,565 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP6, BCC-ESM1, CMIP, historical, r1i1p1f1, gn, v20181214 2023-05-01 20:59:35,571 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP5, bcc-csm1-1, historical, r1i1p1, v1 2023-05-01 20:59:35,573 UTC [18776] INFO PreprocessingTask map/tas created. 2023-05-01 20:59:35,574 UTC [18776] INFO Creating tasks for diagnostic timeseries 2023-05-01 20:59:35,574 UTC [18776] INFO Creating diagnostic task timeseries/script1 2023-05-01 20:59:35,574 UTC [18776] INFO Creating preprocessor task timeseries/tas_amsterdam 2023-05-01 20:59:35,574 UTC [18776] INFO Creating preprocessor 'annual_mean_amsterdam' task for variable 'tas_amsterdam' 2023-05-01 20:59:35,583 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP6, BCC-ESM1, CMIP, historical, r1i1p1f1, gn, v20181214 2023-05-01 20:59:35,586 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP5, bcc-csm1-1, historical, r1i1p1, v1 2023-05-01 20:59:35,588 UTC [18776] INFO PreprocessingTask timeseries/tas_amsterdam created. 2023-05-01 20:59:35,589 UTC [18776] INFO Creating preprocessor task timeseries/tas_global 2023-05-01 20:59:35,589 UTC [18776] INFO Creating preprocessor 'annual_mean_global' task for variable 'tas_global' 2023-05-01 20:59:35,589 UTC [18776] WARNING Preprocessor function area_statistics works best when at least one supplementary variable of ['areacella', 'areacello'] is defined in the recipe for Dataset: {'diagnostic': 'timeseries', 'variable_group': 'tas_global', 'dataset': 'BCC-ESM1', 'project': 'CMIP6', 'mip': 'Amon', 'short_name': 'tas', 'activity': 'CMIP', 'alias': 'CMIP6', 'caption': 'Annual global mean {long_name} according to {dataset}.', 'ensemble': 'r1i1p1f1', 'exp': 'historical', 'frequency': 'mon', 'grid': 'gn', 'institute': ['BCC'], 'long_name': 'Near-Surface Air Temperature', 'modeling_realm': ['atmos'], 'original_short_name': 'tas', 'preprocessor': 'annual_mean_global', 'recipe_dataset_index': 0, 'standard_name': 'air_temperature', 'timerange': '1850/2000', 'units': 'K'} session: 'recipe_python_20230501_205933'. 2023-05-01 20:59:35,598 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP6, BCC-ESM1, CMIP, historical, r1i1p1f1, gn, v20181214 2023-05-01 20:59:35,603 UTC [18776] INFO Found input files for Dataset: tas, Amon, CMIP5, bcc-csm1-1, historical, r1i1p1, v1, supplementaries: areacella, fx, 1pctCO2, r0i0p0 2023-05-01 20:59:35,604 UTC [18776] INFO PreprocessingTask timeseries/tas_global created. 2023-05-01 20:59:35,605 UTC [18776] INFO These tasks will be executed: map/tas, timeseries/tas_global, timeseries/script1, map/script1, timeseries/tas_amsterdam 2023-05-01 20:59:35,623 UTC [18776] INFO Wrote recipe with version numbers and wildcards to: file:///home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/recipe_python_filled.yml 2023-05-01 20:59:35,623 UTC [18776] INFO Running 5 tasks using 5 processes 2023-05-01 20:59:35,682 UTC [18794] INFO Starting task map/tas in process [18794] 2023-05-01 20:59:35,682 UTC [18795] INFO Starting task timeseries/tas_amsterdam in process [18795] 2023-05-01 20:59:35,684 UTC [18796] INFO Starting task timeseries/tas_global in process [18796] 2023-05-01 20:59:35,772 UTC [18776] INFO Progress: 3 tasks running, 2 tasks waiting for ancestors, 0/5 done 2023-05-01 20:59:36,762 UTC [18795] INFO Extracting data for Amsterdam, Noord-Holland, Nederland (52.3730796 °N, 4.8924534 °E) 2023-05-01 20:59:37,685 UTC [18794] INFO Successfully completed task map/tas (priority 1) in 0:00:02.002836 2023-05-01 20:59:37,880 UTC [18776] INFO Progress: 2 tasks running, 2 tasks waiting for ancestors, 1/5 done 2023-05-01 20:59:37,892 UTC [18797] INFO Starting task map/script1 in process [18797] 2023-05-01 20:59:37,903 UTC [18797] INFO Running command ['/apps/jasmin/community/esmvaltool/miniconda3_py310_23.1.0/envs/esmvaltool/bin/python', '/apps/jasmin/community/esmvaltool/ESMValTool_2.8.0/esmvaltool/diag_scripts/examples/diagnostic.py', '/home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/map/script1/settings.yml'] 2023-05-01 20:59:37,904 UTC [18797] INFO Writing output to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/work/map/script1 2023-05-01 20:59:37,904 UTC [18797] INFO Writing plots to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/plots/map/script1 2023-05-01 20:59:37,904 UTC [18797] INFO Writing log to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/map/script1/log.txt 2023-05-01 20:59:37,904 UTC [18797] INFO To re-run this diagnostic script, run: cd /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/map/script1; MPLBACKEND="Agg" /apps/jasmin/community/esmvaltool/miniconda3_py310_23.1.0/envs/esmvaltool/bin/python /apps/jasmin/community/esmvaltool/ESMValTool_2.8.0/esmvaltool/diag_scripts/examples/diagnostic.py /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/map/script1/settings.yml 2023-05-01 20:59:37,980 UTC [18776] INFO Progress: 3 tasks running, 1 tasks waiting for ancestors, 1/5 done 2023-05-01 20:59:38,992 UTC [18795] INFO Extracting data for Amsterdam, Noord-Holland, Nederland (52.3730796 °N, 4.8924534 °E) 2023-05-01 20:59:40,791 UTC [18795] INFO Generated PreprocessorFile: /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/preproc/timeseries/tas_amsterdam/MultiModelMean_historical_Amon_tas_1850-2000.nc 2023-05-01 20:59:41,059 UTC [18795] INFO Successfully completed task timeseries/tas_amsterdam (priority 3) in 0:00:05.375697 2023-05-01 20:59:41,189 UTC [18776] INFO Progress: 2 tasks running, 1 tasks waiting for ancestors, 2/5 done 2023-05-01 20:59:41,417 UTC [18796] INFO Successfully completed task timeseries/tas_global (priority 4) in 0:00:05.732484 2023-05-01 20:59:41,590 UTC [18776] INFO Progress: 1 tasks running, 1 tasks waiting for ancestors, 3/5 done 2023-05-01 20:59:41,602 UTC [18798] INFO Starting task timeseries/script1 in process [18798] 2023-05-01 20:59:41,611 UTC [18798] INFO Running command ['/apps/jasmin/community/esmvaltool/miniconda3_py310_23.1.0/envs/esmvaltool/bin/python', '/apps/jasmin/community/esmvaltool/ESMValTool_2.8.0/esmvaltool/diag_scripts/examples/diagnostic.py', '/home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/timeseries/script1/settings.yml'] 2023-05-01 20:59:41,612 UTC [18798] INFO Writing output to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/work/timeseries/script1 2023-05-01 20:59:41,612 UTC [18798] INFO Writing plots to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/plots/timeseries/script1 2023-05-01 20:59:41,612 UTC [18798] INFO Writing log to /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/timeseries/script1/log.txt 2023-05-01 20:59:41,612 UTC [18798] INFO To re-run this diagnostic script, run: cd /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/timeseries/script1; MPLBACKEND="Agg" /apps/jasmin/community/esmvaltool/miniconda3_py310_23.1.0/envs/esmvaltool/bin/python /apps/jasmin/community/esmvaltool/ESMValTool_2.8.0/esmvaltool/diag_scripts/examples/diagnostic.py /home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/timeseries/script1/settings.yml 2023-05-01 20:59:41,691 UTC [18776] INFO Progress: 2 tasks running, 0 tasks waiting for ancestors, 3/5 done 2023-05-01 20:59:46,069 UTC [18797] INFO Maximum memory used (estimate): 0.3 GB 2023-05-01 20:59:46,072 UTC [18797] INFO Sampled every second. It may be inaccurate if short but high spikes in memory consumption occur. 2023-05-01 20:59:47,192 UTC [18797] INFO Successfully completed task map/script1 (priority 0) in 0:00:09.298761 2023-05-01 20:59:47,306 UTC [18776] INFO Progress: 1 tasks running, 0 tasks waiting for ancestors, 4/5 done 2023-05-01 20:59:49,777 UTC [18798] INFO Maximum memory used (estimate): 0.3 GB 2023-05-01 20:59:49,780 UTC [18798] INFO Sampled every second. It may be inaccurate if short but high spikes in memory consumption occur. 2023-05-01 20:59:50,866 UTC [18798] INFO Successfully completed task timeseries/script1 (priority 2) in 0:00:09.263219 2023-05-01 20:59:50,914 UTC [18776] INFO Progress: 0 tasks running, 0 tasks waiting for ancestors, 5/5 done 2023-05-01 20:59:50,914 UTC [18776] INFO Successfully completed all tasks. 2023-05-01 20:59:50,959 UTC [18776] INFO Wrote recipe with version numbers and wildcards to: file:///home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/run/recipe_python_filled.yml 2023-05-01 20:59:51,233 UTC [18776] INFO Wrote recipe output to: file:///home/users/username/esmvaltool_tutorial/esmvaltool_output/recipe_python_20230501_205933/index.html 2023-05-01 20:59:51,233 UTC [18776] INFO Ending the Earth System Model Evaluation Tool at time: 2023-05-01 20:59:51 UTC 2023-05-01 20:59:51,233 UTC [18776] INFO Time for running the recipe was: 0:00:16.023887 2023-05-01 20:59:51,787 UTC [18776] INFO Maximum memory used (estimate): 1.7 GB 2023-05-01 20:59:51,788 UTC [18776] INFO Sampled every second. It may be inaccurate if short but high spikes in memory consumption occur. 2023-05-01 20:59:51,790 UTC [18776] INFO Removing `preproc` directory containing preprocessed data 2023-05-01 20:59:51,790 UTC [18776] INFO If this data is further needed, then set `remove_preproc_dir` to `false` in your user configuration file 2023-05-01 20:59:51,827 UTC [18776] INFO Run was successful

Pro tip: ESMValTool search paths

You might wonder how ESMValTool was able find the recipe file, even though it’s not in your working directory. All the recipe paths printed from

esmvaltool recipes listare relative to ESMValTool’s installation location. This is where ESMValTool will look if it cannot find the file by following the path from your working directory.

Investigating the log messages

Let’s dissect what’s happening here.

Output files and directories

After the banner and general information, the output starts with some important locations.

- Did ESMValTool use the right config file?

- What is the path to the example recipe?

- What is the main output folder generated by ESMValTool?

- Can you guess what the different output directories are for?

- ESMValTool creates two log files. What is the difference?

Answers

- The config file should be the one we edited in the previous episode, something like

/home/<username>/.esmvaltool/config-user.ymlor~/esmvaltool_tutorial/config-user.yml.- ESMValTool found the recipe in its installation directory, something like

/home/users/username/mambaforge/envs/esmvaltool/bin/esmvaltool/recipes/examples/or if you are using a pre-installed module on a server, something like/apps/jasmin/community/esmvaltool/ESMValTool_<version> /esmvaltool/recipes/examples/recipe_python.yml, where<version>is the latest release.- ESMValTool creates a time-stamped output directory for every run. In this case, it should be something like

recipe_python_YYYYMMDD_HHMMSS. This folder is made inside the output directory specified in the previous episode:~/esmvaltool_tutorial/esmvaltool_output.- There should be four output folders:

plots/: this is where output figures are stored.preproc/: this is where pre-processed data are stored.run/: this is where esmvaltool stores general information about the run, such as log messages and a copy of the recipe file.work/: this is where output files (not figures) are stored.- The log files are:

main_log.txtis a copy of the command-line outputmain_log_debug.txtcontains more detailed information that may be useful for debugging.

Debugging: No ‘preproc’ directory?

If you’re missing the preproc directory, then your

config-user.ymlfile has the valueremove_preproc_dirset totrue(this is used to save disk space). Please set this value tofalseand run the recipe again.

After the output locations, there are two main sections that can be distinguished in the log messages:

- Creating tasks

- Executing tasks

Analyse the tasks

List all the tasks that ESMValTool is executing for this recipe. Can you guess what this recipe does?

Answer

Just after all the ‘creating tasks’ and before ‘executing tasks’, we find the following line in the output:

[18776] INFO These tasks will be executed: map/tas, timeseries/tas_global, timeseries/script1, map/script1, timeseries/tas_amsterdamSo there are three tasks related to timeseries: global temperature, Amsterdam temperature, and a script (tas: near-surface air temperature). And then there are two tasks related to a map: something with temperature, and again a script.

Examining the recipe file

To get more insight into what is happening, we will have a look at the recipe file itself. Use the following command to copy the recipe to your working directory

esmvaltool recipes get examples/recipe_python.yml

Now you should see the recipe file in your working directory (type ls to

verify). Use the nano editor to open this file:

nano recipe_python.yml

For reference, you can also view the recipe by unfolding the box below.

recipe_python.yml

# ESMValTool # recipe_python.yml # # See https://docs.esmvaltool.org/en/latest/recipes/recipe_examples.html # for a description of this recipe. # # See https://docs.esmvaltool.org/projects/esmvalcore/en/latest/recipe/overview.html # for a description of the recipe format. --- documentation: description: | Example recipe that plots a map and timeseries of temperature. title: Recipe that runs an example diagnostic written in Python. authors: - andela_bouwe - righi_mattia maintainer: - schlund_manuel references: - acknow_project projects: - esmval - c3s-magic datasets: - {dataset: BCC-ESM1, project: CMIP6, exp: historical, ensemble: r1i1p1f1, grid: gn} - {dataset: bcc-csm1-1, project: CMIP5, exp: historical, ensemble: r1i1p1} preprocessors: # See https://docs.esmvaltool.org/projects/esmvalcore/en/latest/recipe/preprocessor.html # for a description of the preprocessor functions. to_degrees_c: convert_units: units: degrees_C annual_mean_amsterdam: extract_location: location: Amsterdam scheme: linear annual_statistics: operator: mean multi_model_statistics: statistics: - mean span: overlap convert_units: units: degrees_C annual_mean_global: area_statistics: operator: mean annual_statistics: operator: mean convert_units: units: degrees_C diagnostics: map: description: Global map of temperature in January 2000. themes: - phys realms: - atmos variables: tas: mip: Amon preprocessor: to_degrees_c timerange: 2000/P1M caption: | Global map of {long_name} in January 2000 according to {dataset}. scripts: script1: script: examples/diagnostic.py quickplot: plot_type: pcolormesh cmap: Reds timeseries: description: Annual mean temperature in Amsterdam and global mean since 1850. themes: - phys realms: - atmos variables: tas_amsterdam: short_name: tas mip: Amon preprocessor: annual_mean_amsterdam timerange: 1850/2000 caption: Annual mean {long_name} in Amsterdam according to {dataset}. tas_global: short_name: tas mip: Amon preprocessor: annual_mean_global timerange: 1850/2000 caption: Annual global mean {long_name} according to {dataset}. scripts: script1: script: examples/diagnostic.py quickplot: plot_type: plot

Do you recognize the basic recipe structure that was introduced in episode 1?

- Documentation with relevant (citation) information

- Datasets that should be analysed

- Preprocessors groups of common preprocessing steps

- Diagnostics scripts performing more specific evaluation steps

Analyse the recipe

Try to answer the following questions:

- Who wrote this recipe?

- Who should be approached if there is a problem with this recipe?

- How many datasets are analyzed?

- What does the preprocessor called

annual_mean_globaldo?- Which script is applied for the diagnostic called

map?- Can you link specific lines in the recipe to the tasks that we saw before?

- How is the location of the city specified?

- How is the temporal range of the data specified?

Answers

- The example recipe is written by Bouwe Andela and Mattia Righi.

- Manuel Schlund is listed as the maintainer of this recipe.

- Two datasets are analysed:

- CMIP6 data from the model BCC-ESM1

- CMIP5 data from the model bcc-csm1-1

- The preprocessor

annual_mean_globalcomputes an area mean as well as annual means- The diagnostic called

mapexecutes a script referred to asscript1. This is a python script namedexamples/diagnostic.py- There are two diagnostics:

mapandtimeseries. Under the diagnosticmapwe find two tasks:

- a preprocessor task called

tas, applying the preprocessor calledto_degrees_cto the variabletas.- a diagnostic task called

script1, applying the scriptexamples/diagnostic.pyto the preprocessed data (map/tas).Under the diagnostic

timeserieswe find three tasks:

- a preprocessor task called

tas_amsterdam, applying the preprocessor calledannual_mean_amsterdamto the variabletas.- a preprocessor task called

tas_global, applying the preprocessor calledannual_mean_globalto the variabletas.- a diagnostic task called

script1, applying the scriptexamples/diagnostic.pyto the preprocessed data (timeseries/tas_globalandtimeseries/tas_amsterdam).- The

extract_locationpreprocessor is used to get data for a specific location here. ESMValTool interpolates to the location based on the chosen scheme. Can you tell the scheme used here? For more ways to extract areas, see the Area operations page.- The

timerangetag is used to extract data from a specific time period here. The start time is01/01/2000and the span of time to calculate means is1 Monthgiven byP1M. For more options on how to specify time ranges, see the timerange documentation.

Pro tip: short names and variable groups

The preprocessor tasks in ESMValTool are called ‘variable groups’. For the diagnostic

timeseries, we have two variable groups:tas_amsterdamandtas_global. Both of them operate on the variabletas(as indicated by theshort_name), but they apply different preprocessors. For the diagnosticmapthe variable group itself is namedtas, and you’ll notice that we do not explicitly provide theshort_name. This is a shorthand built into ESMValTool.

Output files

Have another look at the output directory created by the ESMValTool run.

Which files/folders are created by each task?

Answer

- map/tas: creates

/preproc/map/tas, which contains preprocessed data for each of the input datasets, a file calledmetadata.ymldescribing the contents of these datasets and provenance information in the form of.xmlfiles.- timeseries/tas_global: creates

/preproc/timeseries/tas_global, which contains preprocessed data for each of the input datasets, ametadata.ymlfile and provenance information in the form of.xmlfiles.- timeseries/tas_amsterdam: creates

/preproc/timeseries/tas_amsterdam, which contains preprocessed data for each of the input datasets, plus a combinedMultiModelMean, ametadata.ymlfile and provenance files.- map/script1: creates

/run/map/script1with general information and a log of the diagnostic script run. It also creates/plots/map/script1and/work/map/script1, which contain output figures and output datasets, respectively. For each output file, there is also corresponding provenance information in the form of.xml,.bibtexand.txtfiles.- timeseries/script1: creates

/run/timeseries/script1with general information and a log of the diagnostic script run. It also creates/plots/timeseries/script1and/work/timeseries/script1, which contain output figures and output datasets, respectively. For each output file, there is also corresponding provenance information in the form of.xml,.bibtexand.txtfiles.

Pro tip: diagnostic logs

When you run ESMValTool, any log messages from the diagnostic script are not printed on the terminal. But they are written to the

log.txtfiles in the folder/run/<diag_name>/log.txt.ESMValTool does print a command that can be used to re-run a diagnostic script. When you use this the output will be printed to the command line.

Modifying the example recipe

Let’s make a small modification to the example recipe. Notice that now that you have copied and edited the recipe, you can use

esmvaltool run recipe_python.yml

to refer to your local file rather than the default version shipped with ESMValTool.

Change your location

Modify and run the recipe to analyse the temperature for your own location.

Solution

In principle, you only have to modify the location in the preprocessor called

annual_mean_amsterdam. However, it is good practice to also replace all instances ofamsterdamwith the correct name of your location. Otherwise the log messages and output will be confusing. You are free to modify the names of preprocessors or diagnostics.In the

difffile below you will see the changes we have made to the file. The top 2 lines are the filenames and the lines like@@ -39,9 +39,9 @@represent the line numbers in the original and modified file, respectively. For more info on this format, see here.--- recipe_python.yml +++ recipe_python_london.yml @@ -39,9 +39,9 @@ convert_units: units: degrees_C - annual_mean_amsterdam: + annual_mean_london: extract_location: - location: Amsterdam + location: London scheme: linear annual_statistics: operator: mean @@ -83,7 +83,7 @@ cmap: Reds timeseries: - description: Annual mean temperature in Amsterdam and global mean since 1850. + description: Annual mean temperature in London and global mean since 1850. themes: - phys realms: @@ -92,9 +92,9 @@ tas_amsterdam: short_name: tas mip: Amon - preprocessor: annual_mean_amsterdam + preprocessor: annual_mean_london timerange: 1850/2000 - caption: Annual mean {long_name} in Amsterdam according to {dataset}. + caption: Annual mean {long_name} in London according to {dataset}. tas_global: short_name: tas mip: Amon

Key Points

ESMValTool recipes work ‘out of the box’ (if input data is available)

There are strong links between the recipe, log file, and output folders

Recipes can easily be modified to re-use existing code for your own use case

Conclusion of the basic tutorial

Overview

Teaching: 10 min

Exercises: 0 min

Compatibility:Questions

What do I do now?

Where can I get help?

What if I find a bug?

Where can I find more information about ESMValtool?

How can I cite ESMValtool?

Objectives

Breathe - you’re finished now!

Congratulations & Thanks!

Find out about the mini-tutorials, and what to do next.

Congratulations!

Congratulations on completing the ESMValTool tutorial! You should be now ready to go and start using ESMValTool independently.

The rest of this tutorial contains individual mini-tutorials to help work through a specific issue (not developed yet).

What next?

From here, there are lots of ways that you can continue to use ESMValTool.

- You can start from the list of existing recipes and run one of those.

- You can learn how to write your own diagnostics and recipes.

- You can contribute your recipe and diagnostics back into ESMValTool.

- You can learn how to prepare observational datasets to be suitable for use by ESMValTool.

Exercise: What do you want to do next?

- Think about what you want to do with ESMValTool.

- Decide what datasets and variables you want to use.

- Is any observational data available?

- How will you preprocess the data?

- What will your diagnostic script need to do?

- What will your final figure show?

Where can I get more information on ESMValTool?

Additional resources:

Where can I get more help?

There are lots of resources available to assist you in using ESMValTool.

The ESMValTool Discussions page is a good place to find information on general issues, or check if your question has already been addressed. If you have a GitHub account, you can also post your questions there.

If you encounter difficulties, a great starting point is to visit issues page issue page to check whether your issues have already been reported or not. If they have been reported before, suggestions provided by developers can help you to solve the issues you encountered. Note that you will need a GitHub account for this.

Additionally, there is an ESMValTool email list. Please see information on how to subscribe to the user mailing list.

What if I find a bug?

If you find a bug, please report it to the ESMValTool team. This will help us fix issues, ensuring not only your uninterrupted workflow but also contributing to the overall stability of ESMValTool for all users.

To report a bug, please create a new issue using the issue page.

In your bug report, please describe the problem as clearly and as completely as possible. You may need to include a recipe or the output log as well.

How do I cite the Tutorial?

Please use citation information available at https://doi.org/10.5281/zenodo.3974591.

Key Points

Individual mini-tutorials help work through a specific issue (not developed yet).

We are constantly improving this tutorial.

Writing your own recipe

Overview

Teaching: 15 min

Exercises: 30 min

Compatibility:Questions

How do I create a new recipe?

Can I use different preprocessors for different variables?

Can I use different datasets for different variables?

How can I combine different preprocessor functions?

Objectives

Create a recipe with multiple preprocessors

Use different preprocessors for different variables

Run a recipe with variables from different datasets

Introduction

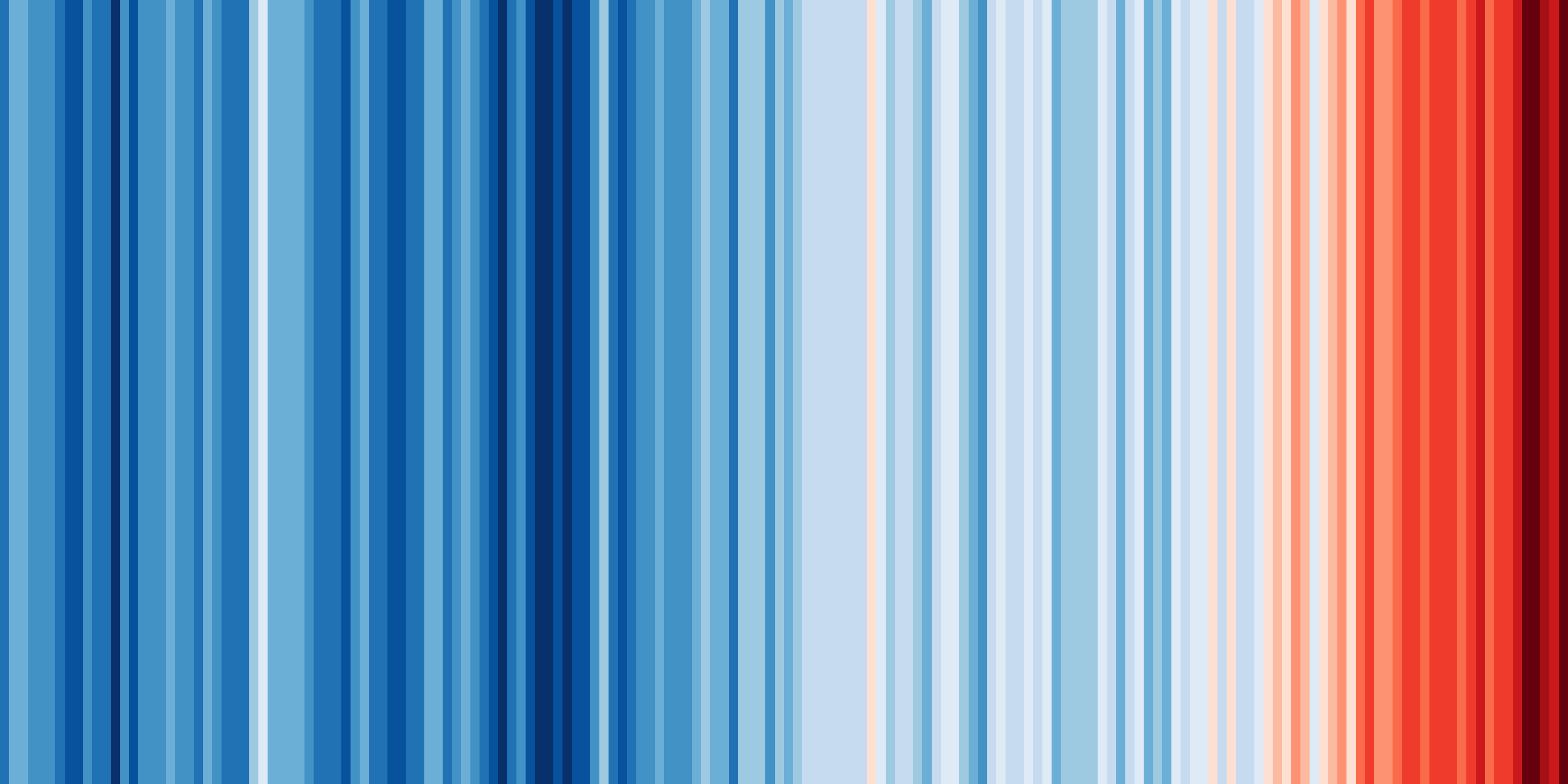

One of the key strenghts of ESMValTool is in making complex analyses reusable and reproducible. But that doesn’t mean everything in ESMValTool needs to be complex. Sometimes, the biggest challenge is in keeping things simple. You probably know the ‘warming stripes’ visualization by Professor Ed Hawkins. On the site https://showyourstripes.info you can find the same visualization for many regions in the world.

Shared by Ed Hawkins under a

Creative Commons 4.0 Attribution International licence. Source:

https://showyourstripes.info

Shared by Ed Hawkins under a

Creative Commons 4.0 Attribution International licence. Source:

https://showyourstripes.info

In this episode, we will reproduce and extend this functionality with ESMValTool. We have prepared a small Python script that takes a NetCDF file with timeseries data, and visualizes it in the form of our desired warming stripes figure.

You can find the diagnostic script that we will use

here (warming_stripes.py).

Download the file and store it in your working directory. If you want, you may also have a look at the contents, but it is not necessary to follow along.

We will write an ESMValTool recipe that takes some data, performs the necessary preprocessing, and then runs our Python script.

Drawing up a plan

Previously, we saw that running ESMValTool executes a number of tasks. Write down what tasks we will need to execute in this episode and what each of these tasks does?

Answer

In this episode, we will need to do 2 tasks:

- A preprocessing task that converts the gridded temperature data to a timeseries of global temperature anomalies

- A diagnostic tasks that calls our Python script, taking our preprocessed timeseries data as input.

Building a recipe from scratch

The easiest way to make a new recipe is to start from an existing one, and modify it until it does exactly what you need. However, in this episode we will start from scratch. This forces us to think about all the steps. We will deal with common errors as they occur throughout the development.

Remember the basic structure of a recipe, and notice that each of them is extensively described in the documentation under the header “The recipe format”:

This is the first place to look for help if you get stuck.

Open a new file called recipe_warming_stripes.yml:

nano recipe_warming_stripes.yml

Let’s add the standard header comments (these do not do anything), and a first description.

# ESMValTool

# recipe_warming_stripes.yml

---

documentation:

description: Reproducing Ed Hawkins' warming stripes visualization

title: Reproducing Ed Hawkins' warming stripes visualization.

Notice that yaml always requires 2 spaces indentation between the different

levels. Pressing ctrl+o will save the file. Verify the filename at the bottom

and press enter. Then use ctrl+x to exit the editor.

We will try to run the recipe after every modification we make, to see if it (still) works.

esmvaltool run recipe_warming_stripes.yml

In this case, it gives an error. Below you see the last few lines of the error message.

...

Error validating data /home/user/esmvaltool_tutorial/recipe_warming_stripes.yml

with schema /home/user/mambaforge/envs/esmvaltool_tutorial/lib/python3.10

/site-packages/esmvalcore/recipe_schema.yml

documentation.authors: Required field missing

YYYY-MM-DD HH:mm:SS,NNN UTC [19451] INFO If you have a question or need help,

please start a new discussion on https://github.com/ESMValGroup/

ESMValTool/discussions

If you suspect this is a bug, please open an issue on

https://github.com/ESMValGroup/ESMValTool/issues

To make it easier to find out what the problem is, please consider attaching

the files run/recipe_*.yml and run/main_log_debug.txt from the output directory.

Here, ESMValTool is telling us that it is missing a required field, namely the authors. We see that ESMValTool always tries to validate the recipe at an early stage.

Let’s add some additional information to the recipe. Open the recipe file again, and add an authors section below the description. ESMValTool expects the authors as a list, like so:

authors:

- lastname_firstname

To bypass a number of similar error messages, add a minimal diagnostics section below the documentation. The file should now look like:

# ESMValTool

# recipe_warming_stripes.yml

---

documentation:

description: Reproducing Ed Hawkins' warming stripes visualization

title: Reproducing Ed Hawkins' warming stripes visualization.

authors:

- doe_john

diagnostics:

dummy_diagnostic_1:

scripts: null

This is the minimal recipe layout that is required by ESMValTool. If we now run the recipe again, you will probably see the following error:

ValueError: Tag 'doe_john' does not exist in section 'authors' of

/home/user/mambaforge/envs/esmvaltool_tutorial/python3.10/

site-packages/esmvaltool/config-references.yml

Pro tip: config-references.yml

The error message above points to a file named config-references.yml. This is where ESMValTool stores all its citation information. To add yourself as an author, add your name in the form

lastname_firstnamein alphabetical order following the existing entries, under the# Development teamcomment. See the List of authors section in the ESMValTool documentation for more information.

For now, let’s just use one of the existing references. Change the author field to

righi_mattia, who cannot receive enough credit for all the effort he put into

ESMValTool. If you now run the recipe again, you should see the final message

ERROR No tasks to run!

Although there is no actual error in the recipe, ESMValTool assumes you mistakenly left out a variable name to process and alerts you with this error message.

Adding a dataset entry

Let’s add a datasets section. We will reuse the same datasets that we used in previous episodes.

Filling in the dataset keys

Use the paths specified in the configuration file to explore the data directory, and look at the explanation of the dataset entry in the ESMValTool documentation. For both the datasets, write down the following properties:

- project

- variable (short name)

- CMIP table

- dataset (model name or obs/reanalysis dataset)

- experiment

- ensemble member

- grid

- start year

- end year

Answers

key file 1 file 2 project CMIP6 CMIP5 short name tas tas CMIP table Amon Amon dataset BCC-ESM1 CanESM2 experiment historical historical ensemble r1i1p1f1 r1i1p1 grid gn (native grid) N/A start year 1850 1850 end year 2014 2005 Note that the grid key is only required for CMIP6 data, and that the extent of the historical period has changed between CMIP5 and CMIP6.

We start with the BCC-ESM1 dataset and add a datasets section to the recipe,

listing a single dataset, as shown below. Note that key fields such

as mip or start_year are included in the datasets section here but are part

of the diagnostic section in the recipe example seen in

Running your first recipe.

datasets:

- {dataset: BCC-ESM1, project: CMIP6, mip: Amon, exp: historical,

ensemble: r1i1p1f1, grid: gn, start_year: 1850, end_year: 2014}

The recipe should run but produce the same message as in the previous case since we still have not included a variable to actually process. We have not included the short name of the variable in this dataset section because this allows us to reuse this dataset entry with different variable names later on. This is not really necessary for our simple use case, but it is common practice in ESMValTool.

Adding the preprocessor section

Above, we already described the preprocessing task that needs to convert the standard, gridded temperature data to a timeseries of temperature anomalies.

Defining the preprocessor

Have a look at the available preprocessors in the documentation. Write down

- Which preprocessor functions do you think we should use?

- What are the parameters that we can pass to these functions?

- What do you think should be the order of the preprocessors?

- A suitable name for the overall preprocessor

Solution

We need to calculate anomalies and global means. There is an

anomaliespreprocessor which needs a granularity, a reference period, and whether or not to standardize the data. The global means can be calculated with thearea_statisticspreprocessor, which takes an operator as argument (in our case we want to compute themean).The default order in which these preprocessors are applied can be seen here:

area_statisticscomes beforeanomalies. If you want to change this, you can use thecustom_orderpreprocessor. We will keep it like this.Let’s name our preprocessor

global_anomalies.

Add the following block to your recipe file:

preprocessors:

global_anomalies:

area_statistics:

operator: mean

anomalies:

period: month

reference:

start_year: 1981

start_month: 1

start_day: 1

end_year: 2010

end_month: 12

end_day: 31

standardize: false

Completing the diagnostics section

Now we are ready to finish our diagnostics section. Remember that we want to make two tasks: a preprocessor task, and a diagnostic task. To illustrate that we can also pass settings to the diagnostic script, we add the option to specify a custom colormap.

Fill in the blanks

Extend the diagnostics section in your recipe by filling in the blanks in the following template:

diagnostics: <... (suitable name for our diagnostic)>: description: <...> variables: <... (suitable name for the preprocessed variable)>: short_name: <...> preprocessor: <...> scripts: <... (suitable name for our python script)>: script: <full path to python script> colormap: <... choose from matplotlib colormaps>Solution

diagnostics: diagnostic_warming_stripes: description: visualize global temperature anomalies as warming stripes variables: global_temperature_anomalies_global: short_name: tas preprocessor: global_anomalies scripts: warming_stripes_script: script: ~/esmvaltool_tutorial/warming_stripes.py colormap: 'bwr'

Now you should be able to run the recipe to get your own warming stripes.

Note: for the purpose of simplicity in this episode, we have not added logging or provenance tracking in the diagnostic script. Once you start to develop your own diagnostic scripts and want to add them to the ESMValTool repositories, this will be required. However, writing your own diagnostic script is beyond the scope of the basic tutorial.

Bonus exercises

Below are a couple of exercise to practice modifying the recipe. For your reference, here’s a copy of the recipe at this point. This will be the point of departure for each of the modifications we’ll make below.

Specific location

On showyourstripes.org, you can download stripes for specific locations. We will reproduce this possibility. Look at the available preprocessors in the documentation, and replace the global mean with a suitable alternative.

Solution

You could have used

extract_pointorextract_region. We usedextract_point. Here’s a copy of the recipe at this point and this is the difference from the previous recipe:--- recipe_warming_stripes.yml +++ recipe_warming_stripes_local.yml @@ -10,9 +10,11 @@ - {dataset: BCC-ESM1, project: CMIP6, mip: Amon, exp: historical, ensemble: r1i1p1f1, grid: gn, start_year: 1850, end_year: 2014} preprocessors: - global_anomalies: - area_statistics: - operator: mean + anomalies_amsterdam: + extract_point: + latitude: 52.379189 + longitude: 4.899431 + scheme: linear anomalies: period: month reference: @@ -27,9 +29,9 @@ diagnostics: diagnostic_warming_stripes: variables: - global_temperature_anomalies: + temperature_anomalies_amsterdam: short_name: tas - preprocessor: global_anomalies + preprocessor: anomalies_amsterdam scripts: warming_stripes_script: script: ~/esmvaltool_tutorial/warming_stripes.py

Different periods

Split the diagnostic in 2: the second one should use a different period. You’re free to choose the periods yourself. For example, 1 could be ‘recent’, the other ‘20th_century’. For this, you’ll have to add a new variable group.

Solution

Here’s a copy of the recipe at this point and this is the difference with the previous recipe:

--- recipe_warming_stripes_local.yml +++ recipe_warming_stripes_periods.yml @@ -7,7 +7,7 @@ - righi_mattia datasets: - - {dataset: BCC-ESM1, project: CMIP6, mip: Amon, exp: historical, - ensemble: r1i1p1f1, grid: gn, start_year: 1850, end_year: 2014} + - {dataset: BCC-ESM1, project: CMIP6, mip: Amon, exp: historical, + ensemble: r1i1p1f1, grid: gn} preprocessors: anomalies_amsterdam: @@ -29,9 +29,16 @@ diagnostics: diagnostic_warming_stripes: variables: - temperature_anomalies_amsterdam: + temperature_anomalies_recent: short_name: tas preprocessor: anomalies_amsterdam + start_year: 1950 + end_year: 2014 + temperature_anomalies_20th_century: + short_name: tas + preprocessor: anomalies_amsterdam + start_year: 1900 + end_year: 1999 scripts: warming_stripes_script: script: ~/esmvaltool_tutorial/warming_stripes.py

Different preprocessors

Now that you have different variable groups, we can also use different preprocessors. Add a second preprocessor to add another location of your choosing.

Pro-tip: if you want to avoid repetition, you can use YAML anchors.

Solution

Here’s a copy of the recipe at this point and this is the difference with the previous recipe:

--- recipe_warming_stripes_periods.yml +++ recipe_warming_stripes_multiple_locations.yml @@ -15,7 +15,7 @@ latitude: 52.379189 longitude: 4.899431 scheme: linear - anomalies: + anomalies: &anomalies period: month reference: start_year: 1981 @@ -25,18 +25,24 @@ end_month: 12 end_day: 31 standardize: false + anomalies_london: + extract_point: + latitude: 51.5074 + longitude: 0.1278 + scheme: linear + anomalies: *anomalies diagnostics: diagnostic_warming_stripes: variables: - temperature_anomalies_recent: + temperature_anomalies_recent_amsterdam: short_name: tas preprocessor: anomalies_amsterdam start_year: 1950 end_year: 2014 - temperature_anomalies_20th_century: + temperature_anomalies_20th_century_london: short_name: tas - preprocessor: anomalies_amsterdam + preprocessor: anomalies_london start_year: 1900 end_year: 1999 scripts:

Additional datasets

So far we have defined the datasets in the datasets section of the recipe. However, it’s also possible to add specific datasets only for specific variable groups. Look at the documentation to learn about the ‘additional_datasets’ keyword, and add a second dataset only for one of the variable groups.

Solution

Here’s a copy of the recipe at this point and this is the difference with the previous recipe:

--- recipe_warming_stripes_multiple_locations.yml +++ recipe_warming_stripes_additional_datasets.yml @@ -45,6 +45,8 @@ preprocessor: anomalies_london start_year: 1900 end_year: 1999 + additional_datasets: + - {dataset: CanESM2, project: CMIP5, mip: Amon, exp: historical, ensemble: r1i1p1} scripts: warming_stripes_script: script: ~/esmvaltool_tutorial/warming_stripes.py

Key Points

A recipe can work with different preprocessors at the same time.

The setting

additional_datasetscan be used to add a different dataset.Variable groups are useful for defining different settings for different variables.

Development and contribution

Overview

Teaching: 10 min

Exercises: 20 min

Compatibility:Questions

What is a development installation?

How can I test new or improved code?

How can I incorporate my contributions into ESMValTool?

Objectives

Execute a successful ESMValTool installation from the source code.

Contribute to ESMValTool development.

We now know how ESMValTool works, but how do we develop it? ESMValTool is an open-source project in ESMValGroup. We can contribute to its development by:

- a new or updated recipe script, see lesson on Writing your own recipe

- a new or updated diagnostics script, see lesson on Writing your own diagnostic script

- a new or updated cmorizer script, see lesson on CMORization: Using observational datasets

- helping with reviewing process of pull requests, see ESMValTool documentation on Review of pull requests

In this lesson, we first show how to set up a development installation of ESMValTool so you can make changes or additions. We then explain how you can contribute these changes to the community.

Git knowledge

For this episode, you need some knowledge of Git. You can refresh your knowledge in the corresponding Git carpentries course.

Development installation

We’ll explore how ESMValTool can be installed it in a develop mode.

Even if you aren’t collaborating with the community, this installation is needed

to run your new codes with ESMValTool.

Let’s get started.

1 Source code

The ESMValTool source code is available on a public GitHub repository: https://github.com/ESMValGroup/ESMValTool. To obtain the code, there are two options:

- Download the code from the repository. A ZIP file called

ESMValTool-main.zipis downloaded. To continue the installation, unzip the file, move to theESMValTool-maindirectory and then follow the sequence of steps starting from the section on ESMValTool dependencies below. - Clone the repository if you want to contribute to the ESMValTool development:

git clone https://github.com/ESMValGroup/ESMValTool.git

This command will ask your GitHub username and a personal token as password. Please follow instructions on GitHub token authentication requirements to create a personal access token. Alternatively, you could generate a new SSH key and add it to your GitHub account. After the authentication, the output might look like:

Cloning into 'ESMValTool'...

remote: Enumerating objects: 163, done.

remote: Counting objects: 100% (163/163), done.

remote: Compressing objects: 100% (125/125), done.

remote: Total 95049 (delta 84), reused 76 (delta 30), pack-reused 94886

Receiving objects: 100% (95049/95049), 175.16 MiB | 5.48 MiB/s, done.

Resolving deltas: 100% (68808/68808), done.

Now, a folder called ESMValTool has been created in your working directory.

This folder contains the source code of the tool.

To continue the installation, we move into the ESMValTool directory:

cd ESMValTool

Note that the main branch is checked out by default.

We can see this if we run:

git status

On branch main

Your branch is up to date with 'origin/main'.

nothing to commit, working tree clean

2 ESMValTool dependencies

Please don’t forget if an esmvaltool environment is already created following the lesson Installation, we should choose another name for the new environment in this lesson.

ESMValTool now uses mamba instead of conda for the recommended installation.

For a minimal mamba installation, see section Install Mamba in lesson

Installation.

It is good practice to update the version of mamba and conda on your machine before setting up ESMValTool. This can be done as follows:

mamba update --name base mamba conda

To simplify the installation process, an environment file environment.yml is

provided in the ESMValTool directory. We create an environment by running:

mamba env create --name esmvaltool --file environment.yml

The environment is called esmvaltool by default.

If an esmvaltool environment is already created following the lesson

Installation,

we should choose another name for the new environment in this lesson by:

mamba env create --name a_new_name --file environment.yml

This will create a new conda environment and install ESMValTool (with all dependencies that are needed for development purposes) into it with a single command.

For more information see conda managing environments.

Now, we should activate the environment:

conda activate esmvaltool

where esmvaltool is the name of the environment (replace by a_new_name

in case another environment name was used).

3 ESMValTool installation

ESMValTool can be installed in a develop mode by running:

pip install --editable '.[develop]'

This will add the esmvaltool directory to the Python path in editable mode and

install the development dependencies. We should check if the installation

works properly. To do this, run the tool with:

esmvaltool --help

If the installation is successful, ESMValTool prints a help message to the console.

Checking the development installation

We can use the command

mamba listto list installed packages in theesmvaltoolenvironment. Use this command to check that ESMValTool is installed in adevelopmode.Tip: see the documentation on conda list.

Solution

Run:

mamba list esmvaltool# Name Version Build Channel esmvaltool 2.10.0.dev3+g2dbc2cfcc pypi_0 pypi

4 Updating ESMValTool

The main branch has the latest features of ESMValTool. Please make sure

that the source code on your machine is up-to-date. If you obtain the source

code using git clone as explained in step 1 Source code, you can run git pull

to update the source code. Then ESMValTool installation will be updated

with changes from the main branch.

Contribution

We have seen how to install ESMValTool in a develop mode.

Now, we try to contribute to its development. Let’s see how this can be achieved.

We first discuss our ideas in an

issue in ESMValTool repository.

This can avoid disappointment at a later stage, for example,

if more people are doing the same thing.

It also gives other people an early opportunity to provide input and suggestions,